Autonomous vehicles (AVs) are among the most exciting and transformative innovations of the century. Whether it is a self-driving taxi or a robot delivering your food, these systems rely on artificial intelligence to make sense of the world around them. Behind that intelligence is one of the most critical and least understood processes: advanced image annotation. So how does it work, and why is it so vital?

What is Image Annotation?

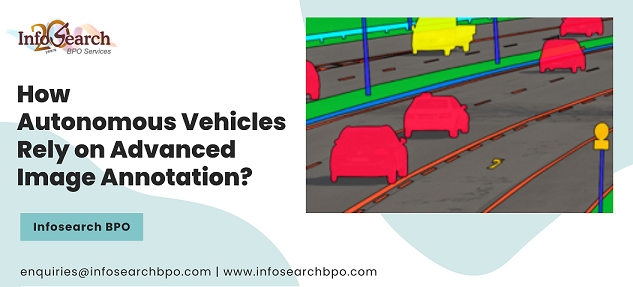

Image annotation is the practice of labeling images to train AI models to identify objects, people, and situations. For autonomous vehicles, this includes labeling things such as:

- Pedestrians

- Vehicles (cars, vans, bikes, buses)

- Road signs

- Traffic lights

- Lane markings

- Obstacles or hazards

These annotated images serve as training sets for computer vision algorithms, enabling the vehicle to see its surroundings and make safe decisions in real time.
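To make the idea of an annotated training set concrete, here is a minimal sketch of how labeled frames might be stored and summarized. The class names (`BoxLabel`, `AnnotatedFrame`), the category strings, the file names, and the coordinates are all hypothetical and chosen only for illustration; real annotation tools use their own formats.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class BoxLabel:
    """One labeled object: a category plus a box in pixel coordinates."""
    category: str  # hypothetical class name, e.g. "pedestrian"
    x_min: int
    y_min: int
    x_max: int
    y_max: int


@dataclass
class AnnotatedFrame:
    """A camera frame together with the labels attached to it."""
    image_path: str
    labels: list[BoxLabel] = field(default_factory=list)


def class_counts(frames: list[AnnotatedFrame]) -> Counter:
    """Count how often each category appears across the training set."""
    return Counter(label.category for frame in frames for label in frame.labels)


# Made-up example data: two frames with three labels in total.
frames = [
    AnnotatedFrame("frame_0001.png", [
        BoxLabel("pedestrian", 412, 180, 460, 310),
        BoxLabel("road_sign", 700, 90, 740, 130),
    ]),
    AnnotatedFrame("frame_0002.png", [
        BoxLabel("pedestrian", 120, 200, 170, 330),
    ]),
]

print(class_counts(frames))
```

A per-category count like this is one simple way to spot classes that are underrepresented in the training data before a model is trained on it.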

Why It Matters for Autonomous Driving

Self-driving cars rely on a continuous stream of image data from cameras and sensors. Without properly labeled training data, the AI model has no idea what it is looking at. High-quality image annotation enables:

- Object detection

- Lane following

- Traffic rule compliance

- Collision avoidance

The AV recognizes a stop sign or a child crossing the road because thousands of such situations were labeled and used to train the model in advance.

Types of Image Annotation for Autonomous Vehicles

- Bounding Boxes

o Drawn as rectangles around cars, people, and other objects.

o Help detect and track moving objects on the road.

- Semantic Segmentation

o Labels every pixel of an image (e.g. road, sidewalk, sky).

o Provides a detailed understanding of the environment.

- Instance Segmentation

o Distinguishes individual objects of the same category (e.g., two different cars).

- 3D Cuboids

o Add depth by drawing three-dimensional boxes around objects.

o Critical for spatial awareness and distance estimation.

- Keypoint Annotation

o Marks specific landmarks on objects (e.g. the joints of a human body).

o Useful for estimating pedestrian pose and movement.
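The annotation types above are related: a pixel-level instance mask carries enough information to derive a box around each object. The sketch below illustrates that relationship. It assumes a mask encoding in which 0 means background and every other integer identifies one object instance; the function name and the tiny example grid are hypothetical.

```python
def boxes_from_instance_mask(
    mask: list[list[int]],
) -> dict[int, tuple[int, int, int, int]]:
    """Return {instance_id: (x_min, y_min, x_max, y_max)} for each non-zero ID.

    Assumed encoding for this sketch: 0 is background, any other integer
    identifies one object instance. Coordinates are inclusive pixel indices.
    """
    boxes: dict[int, tuple[int, int, int, int]] = {}
    for y, row in enumerate(mask):
        for x, instance_id in enumerate(row):
            if instance_id == 0:
                continue  # background pixel, nothing to label
            if instance_id not in boxes:
                boxes[instance_id] = (x, y, x, y)
            else:
                x_min, y_min, x_max, y_max = boxes[instance_id]
                boxes[instance_id] = (
                    min(x_min, x), min(y_min, y),
                    max(x_max, x), max(y_max, y),
                )
    return boxes


# Two cars in one frame: same category, different instance IDs.
instance_mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 2, 2],
    [0, 1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0, 2],
]
instance_category = {1: "car", 2: "car"}

for instance_id, box in boxes_from_instance_mask(instance_mask).items():
    print(instance_category[instance_id], instance_id, box)
```

Both instances share the category "car", which is all a semantic mask would record; the separate IDs are what instance segmentation adds.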

How Annotation Affects Performance and Safety

The more accurate the annotations, the better the model. Poor annotation leads to false detections and dangerous decisions. For example:

- Labeling a pedestrian as a sign can lead to accidents.

- Inconsistently marked lanes in the training data can cause the car to drift out of its lane.

High-quality annotated data improves not only safety but also efficiency, comfort, and public trust in autonomous technology.
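One common way to catch both kinds of error is to compare a label against a trusted reference label for the same object. The sketch below checks the category and uses intersection over union (IoU) to measure how well two boxes overlap. The dictionary layout, the function names, and the 0.5 threshold are assumptions made for illustration, not a standard that any particular tool enforces.

```python
Box = tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def review_label(candidate: dict, reference: dict, min_iou: float = 0.5) -> list[str]:
    """List the problems found when comparing a label to a reference label.

    The 0.5 threshold is an assumed value for this sketch.
    """
    problems = []
    if candidate["category"] != reference["category"]:
        problems.append("category mismatch")
    if iou(candidate["box"], reference["box"]) < min_iou:
        problems.append("box mismatch")
    return problems


reference = {"category": "pedestrian", "box": (100, 100, 200, 300)}
wrong_class = {"category": "road_sign", "box": (100, 100, 200, 300)}
shifted_box = {"category": "pedestrian", "box": (150, 100, 250, 300)}

print(review_label(wrong_class, reference))
print(review_label(shifted_box, reference))
```

The first candidate is the pedestrian-labeled-as-sign case; the second has the right category but a box that covers only part of the object.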

Human-in-the-loop Automation

Much of the work is still done manually to ensure quality, but tools such as AI-assisted labeling and automatic annotation are speeding up the process. A hybrid approach enables:

- Faster dataset production

- Higher accuracy

- Continuous model refinement from new driving scenarios
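A hybrid workflow of this kind is often organized around model confidence: labels the model is sure about are accepted automatically, and the rest go to a human annotator. The following is a minimal sketch of that routing step. The function name, the record fields, the example values, and the 0.9 threshold are all hypothetical.

```python
def route_prelabels(
    prelabels: list[dict], auto_accept_threshold: float = 0.9
) -> tuple[list[dict], list[dict]]:
    """Split model-generated labels into auto-accepted and human-review lists.

    The 0.9 threshold is an assumed value; in practice it would be tuned
    against the error rate a project can tolerate.
    """
    accepted, needs_review = [], []
    for label in prelabels:
        if label["confidence"] >= auto_accept_threshold:
            accepted.append(label)
        else:
            needs_review.append(label)
    return accepted, needs_review


# Made-up pre-labels as a model might produce them.
prelabels = [
    {"frame": "frame_0001.png", "category": "car", "confidence": 0.97},
    {"frame": "frame_0001.png", "category": "pedestrian", "confidence": 0.62},
    {"frame": "frame_0002.png", "category": "road_sign", "confidence": 0.91},
]

accepted, needs_review = route_prelabels(prelabels)
print(len(accepted), "auto-accepted;", len(needs_review), "sent to human review")
```

Corrections made during human review can then be fed back as new training data, which is how the ongoing refinement mentioned above would come about.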

Final Thoughts

Autonomous vehicle perception relies on advanced image annotation. Without accurately labeled images, autonomous vehicles could not learn to navigate. As AV technology evolves, so will the annotation tools and techniques behind it.

Self-driving cars perceive their world through what the machine has learned, and what it has learned comes from intelligent image annotation.

Contact Infosearch for your outsourced annotation services.
